5757 S. University Ave.

Chicago, IL 60637

Main: 773.702.5599

bfi.uchicago.edu

WORKING PAPER · NO. 2024-65

Financial Statement Analysis with Large

Language Models

Alex G. Kim, Maximilian Muhn, and Valeri V. Nikolaev

MAY 2024

Financial Statement Analysis with

Large Language Models

Alex G. Kim

1

Maximilian Muhn

2

Valeri V. Nikolaev

3

This draft: May 20, 2024

Abstract

We investigate whether an LLM can successfully perform financial statement analy-

sis in a way similar to a professional human analyst. We provide standardized and

anonymous financial statements to GPT4 and instruct the model to analyze them to

determine the direction of future earnings. Even without any narrative or industry-

specific information, the LLM outperforms financial analysts in its ability to predict

earnings changes. The LLM exhibits a relative advantage over human analysts in sit-

uations when the analysts tend to struggle. Furthermore, we find that the prediction

accuracy of the LLM is on par with the performance of a narrowly trained state-of-

the-art ML model. LLM prediction does not stem from its training memory. Instead,

we find that the LLM generates useful narrative insights about a company’s future

performance. Lastly, our trading strategies based on GPT’s predictions yield a higher

Sharpe ratio and alphas than strategies based on other models. Taken together, our

results suggest that LLMs may take a central role in decision-making.

Keywords: Financial statement analysis, Large language models, GPT4, chain-of-

thought, neural network, asset pricing, earnings, direction of earnings changes, analysts

JEL Codes: G12, G14, G41, M41

Companion App: To showcase the capabilities of LLMs for financial statement anal-

ysis, we created an interactive Companion App.

*

1

The University of Chicago, Booth School of Business, alex.kim@chicagobooth.edu

2

The University of Chicago, Booth School of Business, maximilian.muhn@chicagobooth.edu

3

The University of Chicago, Booth School of Business, valeri.nikolaev@chicagobooth.edu

We appreciate insightful comments from Bok Baik, Mark Bradshaw, Joachim Gassen, Ralph Koijen,

Laurence van Lent, Christian Leuz, and Sanjog Misra, and workshop participants at the Bernstein Quanti-

tative Finance Conference, University of Chicago, University of North Carolina at Chapel Hill, 2024 Tuck

Accounting Spring Camp at Dartmouth, and Korean-American Accounting Professors’ Association. Yijing

Zhang provided excellent research assistance. The authors gratefully acknowledge financial support from the

University of Chicago Research Support Center, Fama-Miller Center for Finance Research, and the Stevens

Doctoral Program at the University of Chicago Booth School of Business.

*

This app requires ChatGPT Plus subscription and relies on a different prompt that integrates narrative

context while processing 10-Ks and 10-Qs step-by-step. The downside of this functionality is that it is more

prone to retrieval errors and the accuracy of information must be verified.

Financial Statement Analysis with Large Language Models 1

1 Introduction

Can large language models (LLMs) make informed financial decisions or are they simply

a support tool? Their advanced capabilities to analyze, interpret, and generate text enable

LLMs to excel across a wide range of tasks, including summarization of complex disclosures,

sentiment analysis, information extraction, report generation, compliance verification, etc.

(e.g., Bernard et al., 2023; Bybee, 2023; Choi and Kim, 2023; Kim et al., 2023a,b; Lopez-Lira

and Tang, 2023). All these tasks, however, involve the textual domain and require specialized

training or fine-tuning of the model.

1

The boundaries of this disruptive technology outside

of the textual domain and with respect to more general tasks that require numeric analysis

and judgment are yet to be understood. We probe these boundaries in the financial analysis

domain.

We study whether an LLM can successfully perform financial statement analysis in a

way similar to what professional human analysts do. The answer to this question has far-

reaching implications for the future of financial analysis and whether financial analysts will

continue to be the backbone of informed decision-making in financial markets. The answer

is far from obvious, given that an LLM lacks the deep understanding of the financials of a

company that a human expert would have. Further, one of the most challenging domains for a

language model is the numerical domain, where the model needs to carry out computations,

perform human-like interpretations, and make complex judgments (Brown et al., 2020).

While LLMs are effective at textual tasks, their understanding of numbers typically comes

from the narrative context and they lack deep numerical reasoning or the flexibility of a

human mind.

Financial statement analysis (FSA), sometimes referred to as fundamental analysis, is

a particularly useful setting to examine the role of LLMs in future decision-making. Tra-

ditionally, financial statement analysis is performed by financial analysts and investment

professionals with the primary objective to understand the financial health of a company

and determine whether its performance is sustainable. Unlike a typical task performed by

an LLM, FSA is a quantitative task that involves analyzing trends and ratios. At the same

time, it also requires critical thinking, reasoning, and ultimately, complex judgments. Im-

portantly, unlike in other applications, such as answering bar or CPA exam questions (Choi

et al., 2022; Eulerich et al., 2023), an LLM cannot rely on its memory for the correct answer.

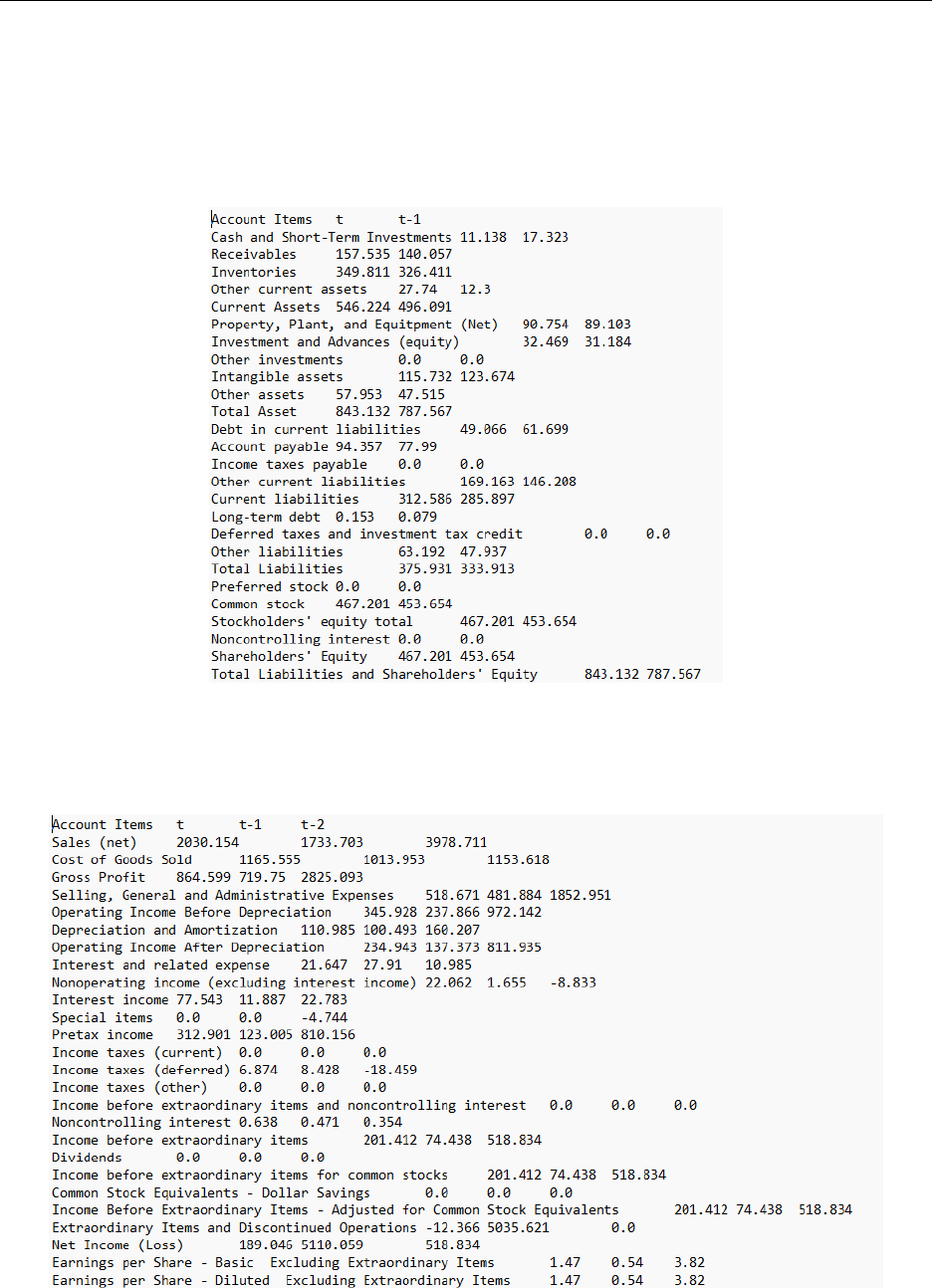

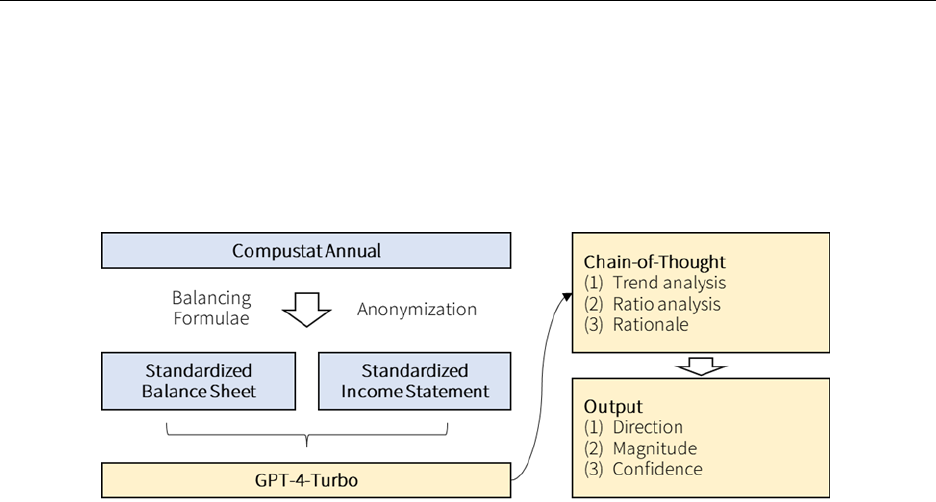

Our research design involves passing a balance sheet and income statement in a stan-

dardized form to the large language model, GPT 4.0 Turbo, and asking the model to analyze

1

For example, to be able to efficiently summarize texts, an LLM is trained on a large corpus of documents

that involve summaries typically generated by humans

Financial Statement Analysis with Large Language Models 2

them. In particular, based on the analysis of the two financial statements, the model must

decide whether a firm’s economic performance is sustainable and, more specifically, whether

a company’s earnings will grow or decline in the following period. We focus on earnings

because they are the primary variable forecasted by financial analysts and fundamental for

valuation (Penman and Sougiannis, 1998; Penman, 2001; Monahan et al., 2018).

A key research design choice that we make is to not provide any textual information

(e.g., Management Discussion and Analysis) that typically accompanies financial statements.

While textual information is easy to integrate, our primary interest lies in understanding the

LLMs’ ability to analyze and synthesize purely financial numbers. We use this setup to

examine several research questions.

First, can a large language model generate economic insights purely from the numbers

reported in financial statements absent any narrative context? How does an LLM’s per-

formance compare to that of human analysts and do they add incremental value? Can

the model’s performance be enhanced via instructions that emulate steps typically followed

by financial analysts? How does LLM’s performance compare to other benchmarks, such

as logistic regression and a state-of-the-art ANN design, and whether the model can offer

additional insights?

Conceptually, an LLM can add value relative to a human analyst due to its ability

to quickly analyze large quantities of unstructured data and a vast knowledge base that

enables to model to recognize patterns, e.g., familiar business situations, in the data. It is

not obvious, however, that these considerations are particularly relevant for the present task.

In fact, there are a number of reasons to expect that professional analysts will outperform a

machine-based approach to financial statement analysis. First, financial statement analysis

is a complex and loosely defined task that involves ambiguity and requires common sense,

intuition, and flexibility of the human mind. Second, it requires reasoning and judgment that

machines presently lack. Finally, it necessitates a broader understanding of the industry and

macro-economy.

When compared to a narrowly specialized ML application, such as an artificial neural

net (ANN) trained for earnings prediction, an LLM also appears to be at a serious disadvan-

tage. Training a specialized ANN allows the model to learn deep interactions that contain

important cues that cannot be easily gathered by the general-purpose model without pro-

viding additional insights or context. Nevertheless, an LLM’s advantage potentially lies in

its vast knowledge and general understanding of the world, such as business concepts and

investment theories that allow it to emulate deductive reasoning performed by humans. This

could include intuitive reasoning and forming hypotheses based on incomplete information

or previously unseen scenarios.

Financial Statement Analysis with Large Language Models 3

Our approach to testing an LLM’s performance involves two steps. First, we anonymize

and standardize corporate financial statements to prevent the potential memory of the com-

pany by the language model. In particular, we omit company names from the balance sheet

and income statement and replace years with labels, such as t, and t − 1. Further, we

standardize the format of the balance sheet and income statement in a way that follows

Compustat’s balancing model. This approach ensures that the format of financial state-

ments is identical across all firm-years so that the model does not know what company or

even time period its analysis corresponds to.

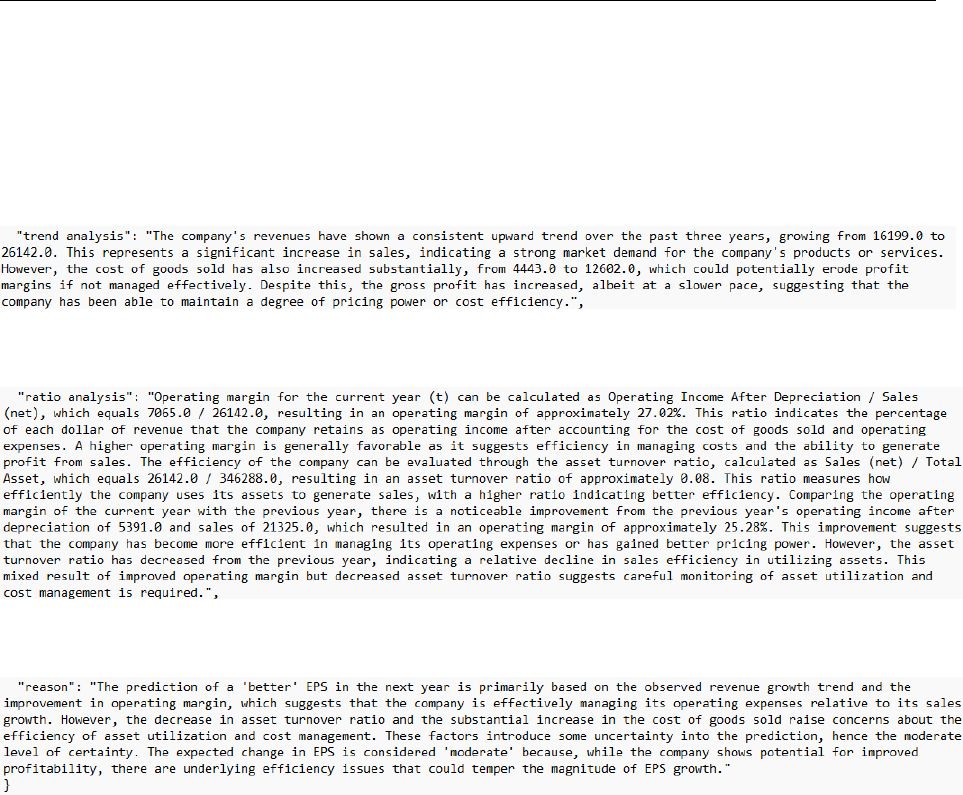

In the second stage, we design prompts that instruct the model to perform financial

statement analysis and, subsequently, to determine the direction of future earnings.

2

In

addition to a simple prompt, we develop a Chain-of-Thought (CoT) prompt that effectively

“teaches” the model to mimic a financial analyst.

3

In particular, as a part of their analysis,

financial analysts identify notable trends in financial statement line items, compute key

financial ratios (e.g., operating efficiency, liquidity, and (or) leverage ratio), synthesize this

information, and form expectations about future earnings (Bouwman et al., 1987). Our

CoT prompt implements this thought process via a set of instructions ultimately making

a determination of whether next year’s earnings will increase or decrease compared to the

current year.

We test the model’s performance using the Compustat universe and, when necessary,

intersect it with the IBES universe. The full sample spans the 1968-2021 period and includes

150,678 firm-year observations from 15,401 distinct firms. The analyst sample spans the

1983-2021 period with 39,533 observations from 3,152 distinct firms. Our target variable

across all models is a directional change in future earnings. To evaluate analysts’ prediction

accuracy, we compute consensus forecasts (the median of individual analyst forecasts issued

in the month following the release of financial statements) and use them as an expectation for

the following year’s earnings. This ensures the comparability of analysts’ forecasts and model

prediction results.

4

In addition, we also use three-month and six-month ahead consensus

forecasts as alternative expectation benchmarks. These benchmarks disadvantage the LLM

as they incorporate the information acquired during the year. However, because analysts

2

Focusing on predicting the direction of future earnings provides a specific and measurable objective,

facilitating the benchmarking of the model’s performance. It is also consistent with early and more recent

literature on this topic (e.g., Ou and Penman, 1989; Chen et al., 2022). Additionally, the focus on a binary

variable is also motivated by the notion that most key decisions performed by humans are binary in nature

(e.g., Kahneman, 2011).

3

Chain-of-thought prompts are known to enhance the model’s problem-solving capability and induce

human-like reasoning (Wei et al., 2022).

4

Since the quantitative models use only financial statement variables, we thus align the timing of human

forecasts with the timing of AI-based forecasts.

Financial Statement Analysis with Large Language Models 4

may be sluggish to incorporate new information into their forecasts, we report them for

comparison purposes.

We start by analyzing GPT’s performance compared to security analysts in predicting the

direction of future earnings (Ou and Penman, 1989). At the outset, we note that predicting

changes in EPS is a highly complex task as the EPS time series are approximated by a random

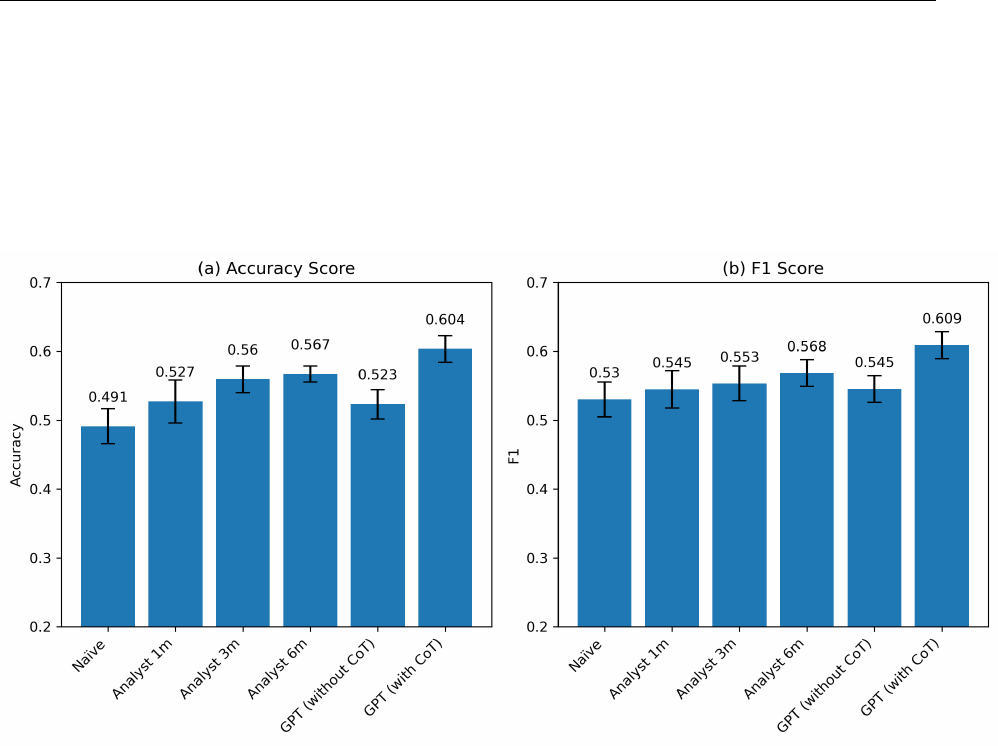

walk and contain a large unpredictable component. We find that the first-month analysts’

forecasts achieve an accuracy of 53% in predicting the direction of future earnings, which

dominates the 49% accuracy of a naive model that extrapolates the prior year’s change.

5

Three- and six-month ahead forecasts achieve a meaningfully higher accuracy of 56% and

57% respectively, which is intuitive given that they incorporate more timely information.

A “simple” non-CoT prompt GPT-based forecasts achieve a performance of 52%, which

is lower compared to the analyst benchmarks, which is in line with our prior. However, when

we use the chain of thought prompt to emulate human reasoning, we find that GPT achieves

an accuracy of 60%, which is remarkably higher than that achieved by the analysts. Similar

conclusions follow if we examine the F1-score, which is an alternative metric to evaluate a

model’s forecasting ability (based on a combination of its precision and recall). This implies

that GPT comfortably dominates the performance of a median financial analyst in analyzing

financial statements to determine the direction a company is moving in.

We probe deeper to understand the strengths and weaknesses of humans relative to an

LLM. Intuitively, human analysts may rely on soft information or a broader context not

available to the model and thus add value (Costello et al., 2020; Liu, 2022). Indeed, we

find that analysts’ forecasts contain useful insights about future performance not captured

by GPT. Furthermore, we show that when humans struggle to come up with the future

forecast, GPT’s insights are more valuable. Similarly, in the instances where human forecasts

are prone to biases or inefficiency (i.e., not incorporating information rationally), GPT’s

forecasts are more useful in predicting the direction of future earnings.

As human forecasts are known to exhibit statistical biases (Abarbanell and Bernard, 1992;

Basu and Markov, 2004), it is also interesting to examine GPT’s performance relative to

specialized ML applications trained specifically to predict earnings based on a large dataset.

We examine three such forecasting models. The first model follows Ou and Penman (1989)

and relies on a stepwise logistic regression model with 59 predictors.

6

Our second model

is an artificial neural network (ANN) that uses the same 59 predictors but also leverages

5

This finding is consistent with Bradshaw et al. (2012), who show that analysts are superior in predicting

one-year ahead earnings.

6

We exclude predictors that rely on stock prices, in particular the P/E ratio, because balance sheets

and income statements do not contain stock price information. This exclusion ensures comparability of our

benchmark. The results are qualitatively similar, however, if we include this variable.

Financial Statement Analysis with Large Language Models 5

non-linearities and interactions among them. Third, to ensure consistency between GPT and

ANN, we also use the ANN model trained on the same information set (the income statement

and balance sheet) that we provide to GPT. Importantly, we train these models each year

based on five years of historical data using a population of observations on Compustat. All

forecasts are out of sample.

7

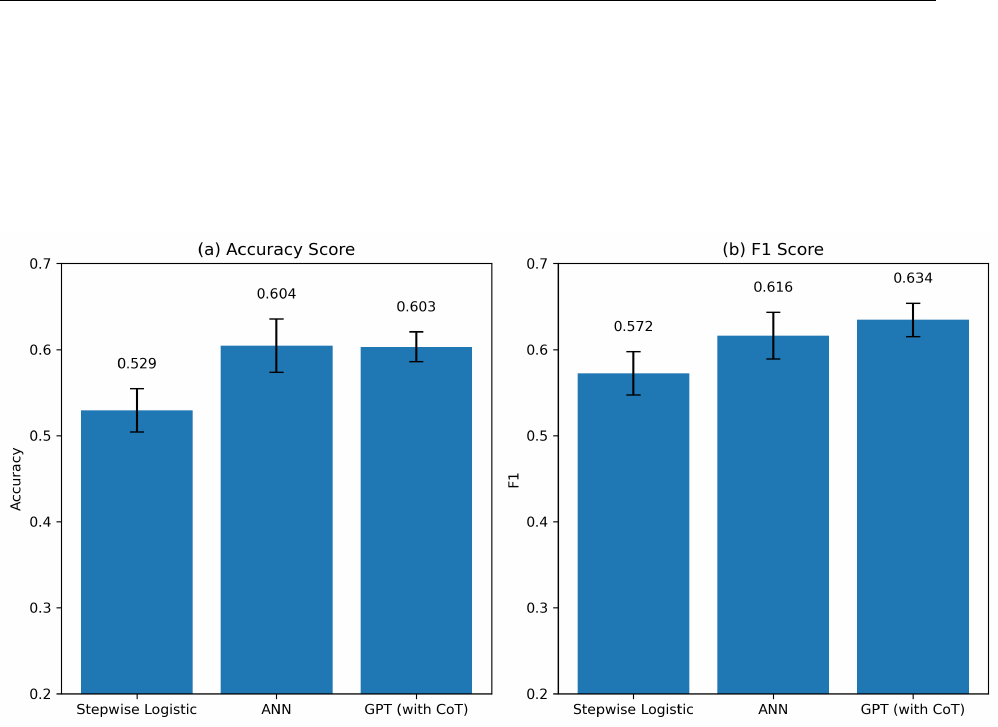

Using the entire Compustat sample, we find that the stepwise logistic regression achieves

an accuracy (F1-score) of 52.94% (57.23%), which is on par with human analysts and consis-

tent with the prior literature (Ou and Penman, 1989; Hunt et al., 2022). In contrast, ANN

trained on the same data achieves a much higher accuracy of 60.45% (F1-score 61.62), which

is in the range of the state-of-the-art earnings prediction models. When we use GPT CoT

forecasts, we observe that the model achieves an accuracy of 60.31% on the entire sample,

which is very similar to the ANN’s accuracy. In fact, GPT exhibits a meaningfully higher F1

score compared to the ANN (63.45% vs. 61.6%). When we train the ANN exclusively using

the data from the two financial statements (fed into GPT), which is a smaller information

set, we find that ANN’s predictive ability is slightly lower, with an accuracy (F1-score) of

59.02% (60.66%), compared to GPT’s performance. Overall, these findings suggest that

GPT’s accuracy is on par (or even slightly higher) than the accuracy of narrowly specialized

state-of-the-art machine learning applications. This is a somewhat surprising result because

specialized models are trained to leverage information most efficiently. It indicates a remark-

able aptitude a pre-trained large language model possesses to analyze financial statements

and even more so given that we do not provide any textual disclosures, such as MD&A.

We further observe that ANN’s and GPT’s predictions are complementary in that both

of them contain useful incremental information with some indication that GPT tends to

do well when ANN struggles. In particular, ANN predicts earnings based on the training

examples it saw in the past data, and given that many of the examples are complex and

highly multidimensional, its learning capacity may be limited. In contrast, GPT makes

relatively fewer mistakes when predicting the earnings of small or loss-making companies,

likely benefiting from its human-like reasoning and extensive knowledge. This ability to draw

upon a broader range of knowledge provides a distinct advantage for the language model.

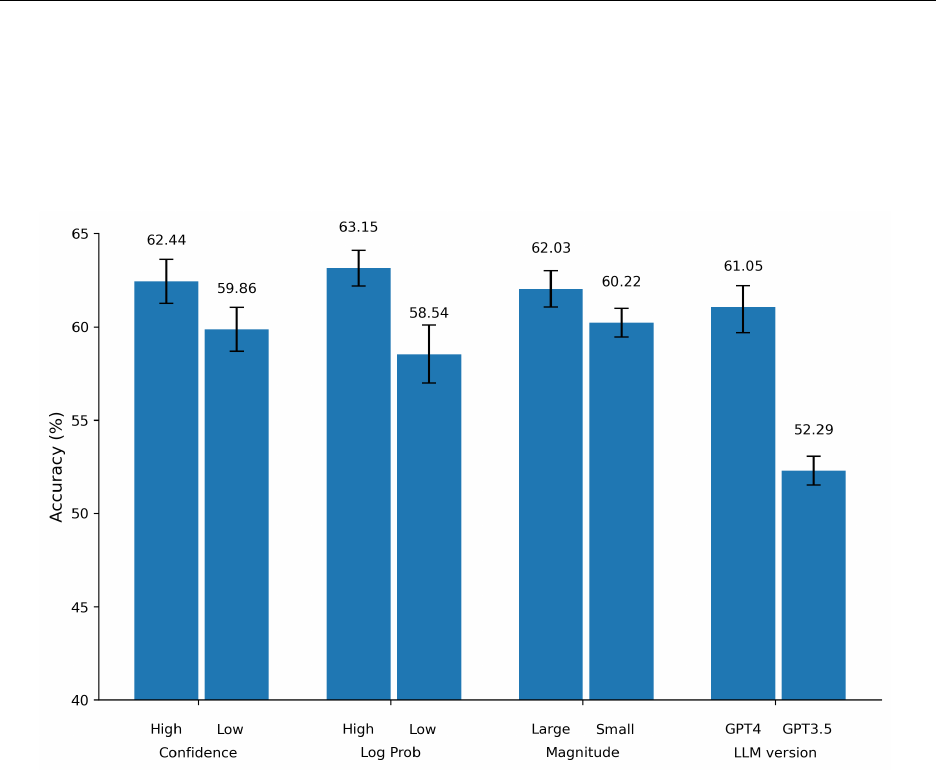

We perform several additional experiments partitioning the samples based on GPT’s

confidence in its answers, and using different families of LLMs. When GPT answers with

higher confidence, the forecasts tend to be more accurate than less confident forecasts. We

also find that the earlier version, GPT3.5, shows considerably less impressive performance,

suggesting that our main results should not be taken for granted. At the same time, we show

7

We use five years to allow the model’s parameters to change over time, which helps to ensure accuracy.

We also experimented with longer windows and found similar results

Financial Statement Analysis with Large Language Models 6

that the results generalize to other LLMs. In particular, Gemini Pro, recently released by

Google, achieves a similar level of accuracy compared to GPT 4.

Given the documented consistently impressive LLM’s performance in fundamental anal-

ysis, it is interesting to understand why the model is so successful. We examine two broad

hypotheses. The first hypothesis is that GPT’s performance is driven by its (possibly near-

perfect) memory. It would be especially problematic if GPT could somehow infer the com-

pany’s identity and year from the data and match this information with the sentiment about

this company learned from newspaper articles or press releases. We aim to rule out this

hypothesis (see Section 6.1). Furthermore, we replicate our results using the most recent

year of data, which lies outside GPT4’s training period (i.e., pure out-of-sample tests).

Our second hypothesis is that GPT generates useful insights based on which the model

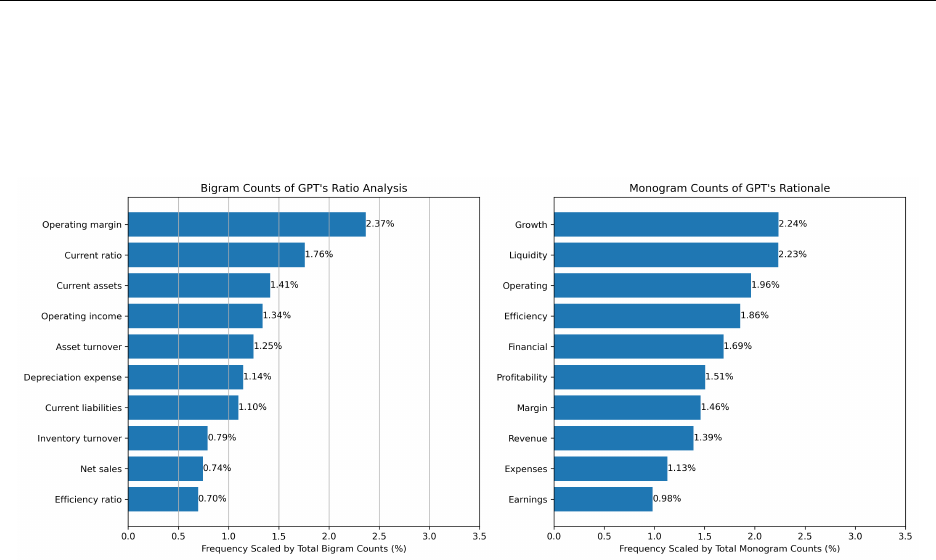

infers the direction of future earnings. For example, we observe that the model frequently

computes standard ratios computed by financial analysts and, as instructed by CoT prompt,

generates narratives that analyze these ratios. To test this, we pool all narratives generated

by the model for a given firm-year and encode them into 768-dimensional vectors (embed-

dings) using BERT. We then feed these vectors into an ANN and train it to predict the

direction of future earnings. We find that the ANN trained on the GPT’s narrative insights

achieves an accuracy of 59%, which is almost as high as the GPT forecast accuracy (60%).

In fact, the embedding-based ANN achieves an F1-score that is higher than GPT’s (65%

vs. 63%). This result presents direct evidence that the narrative insights generated by the

model are informative about future performance. Further, we observe a 94% correlation

between GPT’s forecasts and ANN forecasts based on the GPT’s narratives, suggesting that

the information encoded by these narratives is the basis for GPT’s forecasts. We also find

that narratives related to ratio analysis, in particular, are most important in explaining

the direction of future earnings. In sum, the narratives derived from CoT reasoning are

responsible for the model’s superior performance.

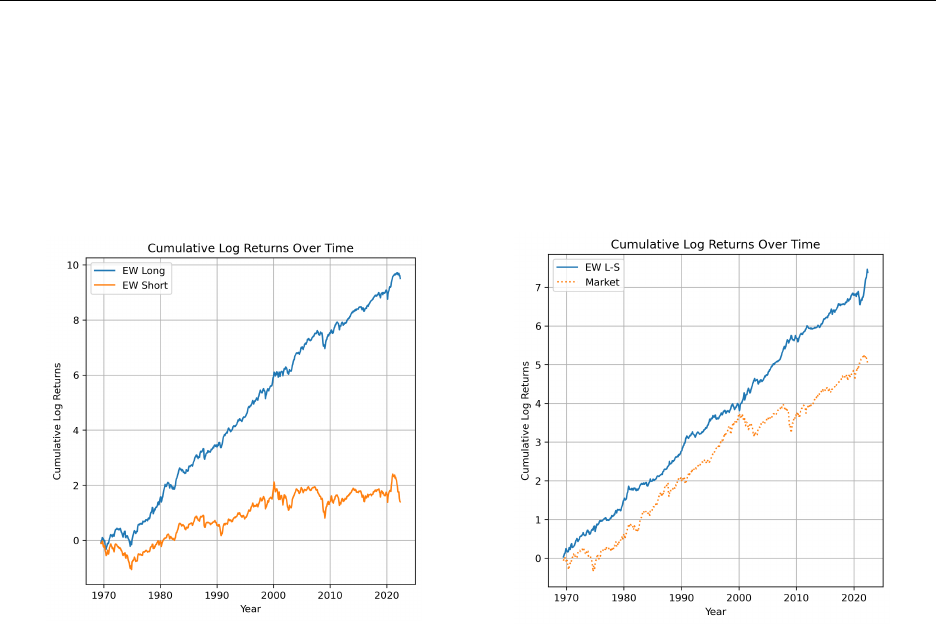

Finally, we explore the economic usefulness of GPT’s forecasts by analyzing their value

in predicting stock price movements. We find that the long-short strategy based on GPT

forecasts outperforms the market and generates significant alphas and Sharpe ratios. For

example, alpha in the Fama-French three-factor model exceeds 12% per year. GPT stands

out for doing particularly well in predicting the returns for small companies, as compared to

ANN-based strategies.

8

We make several contributions to the literature. First, to the best of our knowledge, we

8

This finding aligns with our earlier result that GPT is relatively better in predicting earnings for smaller

companies compared to ANNs. Given that GPT’s training dataset likely contained a disproportionate

amount of information from larger firms, this result further challenges the notion that GPT’s performance

is merely a function of its memory.

Financial Statement Analysis with Large Language Models 7

are the first to provide large-scale evidence on LLM’s ability to analyze financial statements

– a complex task that is traditionally performed by human analysts. We show that an LLM

can generate state-of-the-art inferences about the direction of the company, outperforming

financial analysts and prior models and generating valuable insights along the way. Im-

portantly, we show that the language model can successfully analyze numbers in financial

statements without any narrative context.

Second, our results provide evidence on the limits of LLMs. In particular, the boundaries

of generative AI to successfully perform tasks outside of their native domain are not well

understood. We find that an LLM excels in a quantitative task that requires intuition

and human-like reasoning. The ability to perform tasks across domains points towards the

emergence of Artificial General Intelligence. Broadly, our analysis suggests that LLMs can

take a more central place in decision-making than what is previously thought.

Third, we contribute to the literature on fundamental analysis. Starting from Ou and

Penman (1989), there is a large literature in accounting that focuses on earnings prediction

based on accounting fundamentals (for example, Bochkay and Levine, 2019; Hunt et al.,

2022; Chen et al., 2022). In particular, Chen et al. (2022) predict the direction of earnings

changes using tree-based machine learning models trained on over 12,000 exploratory vari-

ables based on firms’ XBRL tags.

9

We use a novel approach to analyze financial information

to derive insights about future performance. In particular, we show that an LLM-based

financial statement analysis, by drawing on vast knowledge and chain-of-thought reasoning,

complements humans as well as specialized models in generating value-relevant information.

In that sense, we also contribute to the recent literature on the relative advantage of humans

versus AI in financial markets (Costello et al., 2020; Liu, 2022; Cao et al., 2024).

2 Conceptual Underpinnings

Financial statement analysis, or fundamental analysis, has long been considered of crit-

ical importance for informed decision-making (e.g., Graham and Dodd, 1934). It uses the

numbers reported in financial statements to gain insights into the financial health of the

company, aiming to reveal information about a firm’s future prospects and valuation (Ou

and Penman, 1989; Piotroski, 2000; Sloan, 2019).

Financial statement analysis underlies the work performed by financial analysts, who

play a pivotal role in financial markets.

10

One of their primary tasks involves predicting

9

The observed variation in prediction accuracy relative to Chen et al. (2022) can be attributed to the

considerably fewer predictive variables included in our sample. Additionally, when our analysis is confined

to firms examined in Chen et al. (2022), the prediction accuracy of GPT notably increases to 64%.

10

Analysts are often formally trained in financial statement analysis. For example, financial statement

Financial Statement Analysis with Large Language Models 8

firms’ earnings, which serves both as an input in their own stock market recommendations

and an output that informs investors (Stickel, 1991; Brown et al., 2015). When making

earnings forecasts, their work typically begins with a systematic analysis of financial state-

ments (Bouwman et al., 1987), often using standardized templates to ensure consistency and

accuracy. This analysis enables financial analysts to establish a baseline understanding of

a company’s financial position and performance, assessing factors such as operating perfor-

mance or capital structure. They then contextualize this financial data by drawing upon

their industry and private knowledge about the firm before issuing their forecasts (Brown

et al., 2015). The accuracy and quality of these forecasts not only drive market percep-

tions but also are fundamental to analysts’ career advancement and job security (Basu and

Markov, 2004; Groysberg et al., 2011).

Prior research generally concludes that sell-side analysts outperform time series models in

terms of producing credible annual earnings forecasts (e.g., Bradshaw, 2011). Consequently,

these forecasts are frequently used as a proxy for markets’ earnings expectations. At the same

time, prior research has shown that financial analysts produce potentially erroneous or biased

estimates (Bradshaw, 2011; Kothari et al., 2016). For example, Green et al. (2016) show that

analysts make technical errors and questionable economic judgments when evaluating firms

with quantitative methods. Evidence from De Bondt and Thaler (1990) or Bordalo et al.

(2019) suggest that financial analysts overreact to recent events. These mistakes and biases

highlight the complexity of processing information efficiently when large volumes of data are

involved.

Recognizing these challenges in conventional financial forecasting and human information

processing, general-purpose language models, such as ChatGPT, hold promise in facilitat-

ing financial statement analysis and the associated tasks such as earnings forecasting and

decision-making more generally. These advanced AI systems are noted for their expansive

knowledge across various domains and ability to quickly and efficiently process large quanti-

ties of data (Achiam et al., 2023). For example, their proficiency extends to answering CFA

or CPA exam questions (Eulerich et al., 2023), demonstrating their financial knowledge and

potential for understanding theories. In a similar vein, prior literature has shown that these

models are capable of efficiently processing large sets of financial data (e.g., Kim et al.,

2023b,a). LLMs have also shown promise in predicting certain economic outcomes. Lopez-

Lira and Tang (2023) and Jiang et al. (2022) show that GPT can explain short-term stock

returns based on newspaper headlines and Bybee (2023) finds that GPT’s macroeconomic

prediction aligns well with the expert survey results. In addition, Hansen and Kazinnik

(2023) document that GPT can understand the political stance of FOMC announcements

analysis is a major part of the Level I CFA exam.

Financial Statement Analysis with Large Language Models 9

and relate it to future macroeconomic shocks.

However, despite the successes of large language models in many tasks, they are pri-

marily viewed as a support tool and their ability to act autonomously to perform financial

statement analysis at a level of a human analyst faces significant challenges. First, financial

statement analysis is a broad task that is more of an art than science, whereas machines

typically excel in narrow, well-defined tasks. It requires common sense, intuition, ability

to reason and make judgements, ability to handle situations unseen previously. Second,

LLM is not trained to analyze financial information, e.g., in the same way they are trained

to summarize text or answer questions. In fact, inputs into the tasks performed by LLMs

have been predominantly qualitative and language-based, and, LLMs have struggled with

understanding numeric domain (Brown et al., 2020). Third, humans are more capable of

incorporating their knowledge of broader context – something a machine often cannot do –

by taking into account soft information, knowledge of the industry, regulatory, political, and

macroeconomic factors. These factors stack up against the odds that an LLM can achieve a

human like performance in analyzing financial statements.

11

An alternative to utilizing a general-purpose large language model for financial statement

analysis involves specifying a more narrow objective, such as earnings prediction, and training

a specialized ML model, such as Artificial Neural Network (ANN), to perform this task.

Unlike the general-purpose large language models, which are trained to predict the next word

in a textual sequence, ANNs learn deep interactions among a large number of predictors to

deliver powerful forecasts of the target variable.

12

Because LLMs are not trained to uncover

these complex relationships among predictors, they are fundamentally disadvantaged relative

to the specialized models in a specific prediction task. Nevertheless, the effectiveness of these

ANNs can be limited if they encounter patterns not observed during training with sufficient

frequency. This is where theoretical knowledge or general understanding of how the world

works becomes essential, as does the value of human experience, intuition, and judgment.

This grants possibly an important advantage to an LLM due to its training on a vast body of

general knowledge that encompasses a multitude of business cases and situations, financial

theories, and economic contexts. This broader theoretical foundation potentially allows

LLMs to infer insights even from unfamiliar data patterns, providing an advantage in the

complex domain of financial analysis.

11

These more complex quantitative tasks have been traditionally seen as outside of the LLM’s “technolog-

ical frontier” (e.g. Dell’Acqua et al., 2023). Consistent with this argument, Li et al. (2023) processes earnings

press releases and finds that GPT performs worse in predicting earnings relative to sell-side analysts.

12

Over time, methods for predicting earnings have progressively advanced within the accounting litera-

ture. Ou and Penman (1989) predict earnings changes using a stepwise logistic regression model that uses

approximately 60 accounting variables as input. Most recently, Chen et al. (2022) use 13,881 in-line XBRL

tags and tree-based machine learning models to predict future earnings.

Financial Statement Analysis with Large Language Models 10

3 Methodology and Data

In this section, we outline how we approach the primary task of using an LLM to analyze

and predict earnings changes. Earnings prediction is a complex task that combines qualita-

tive and quantitative analyses and involves professional judgment. We model how analysts

make earnings predictions with a chain-of-thought prompt using GPT 4.

3.1 Financial Statement Analysis and Earnings Prediction

Overview Earnings prediction derived from financial statement analysis is of considerable

importance to accounting information users. For example, such predictions help investors

to make inferences about the cross-section of expected stock returns (Fama and French,

2015) or to pick the best-performing stocks (Piotroski, 2000). However, earnings are hard

to predict as they are influenced by many exogenous factors such as macroeconomic shocks

(Ball et al., 2022), product market demand shocks, changes in accounting standards (Ball

et al., 2000), and many other factors. Therefore, predicting earnings is challenging even for

state-of-the-art ML models (see Bochkay and Levine, 2019; Chen et al., 2022, for example).

Financial analysts approach this complex task by performing financial statement analysis.

They first analyze financial statements, identifying notable changes or trends in accounting

information. They choose which financial ratios to compute to obtain further insights. Their

analysis is enriched by contextual information, such as industry information, understanding

of the competitive landscape, and macroeconomic conditions (Bouwman et al., 1987). Based

on this information, they apply professional judgments to determine whether a company’s

earnings will grow or contract in the future.

In this study, we specifically focus on a relatively narrow information set that includes

numerical information reported on the face of two primary financial statements. While

this lacks textual information or broader context and thus puts an LLM at a disadvantage

relative to a human, it presents a well-defined information set of exclusively numeric data.

This approach allows us to test the limits of the model when analyzing financials and deriving

insights from the numeric data – something that an LLM is not designed nor trained to do.

To approach FSA-based earnings prediction based on a Large Language Model, we im-

plement two types of prompts. First, we use a “simple” prompt that instructs an LLM to

analyze the two financial statements of a company and determine the direction of future

earnings. This prompt does not provide further guidance on how to approach the prediction

task, however.

13

Second, we implement a Chain-of-Thought prompt that breaks down the

13

In particular, we simply present a standardized and anonymous balance sheet and income statement

and ask the model to predict whether earnings will increase or decrease in the subsequent period.

Financial Statement Analysis with Large Language Models 11

problem into steps that parallel those followed by human analysts. This prompt effectively

ingrains the methodology into the model, guiding it to mimic human-like reasoning in its

analysis. We mostly focus on the results from this second prompt in our analysis.

Human Processing and Chain-of-Thought Modern large language models can retrieve

numbers from structured tables and perform simple calculations. However, they lack the

ability to reason like a human and perform judgment. Recent research suggests that chain-

of-thought prompting can significantly enhance the reasoning and problem-solving abilities

of large language models (Wei et al., 2022).

We implement the CoT prompt as follows. We instruct the model to take on the role

of a financial analyst whose task is to perform financial statement analysis. The model

is then instructed to (i) identify notable changes in certain financial statement items, and

(ii) compute key financial ratios without explicitly limiting the set of ratios that need to be

computed. When calculating the ratios, we prompt the model to state the formulae first, and

then perform simple computations. The model is also instructed to (iii) provide economic

interpretations of the computed ratios. Then, using the basic quantitative information and

the insights that follow from it, the model is instructed to predict whether earnings are likely

to increase or decrease in the subsequent period. Along with the direction, we instruct the

model to produce a paragraph that elaborates its rationale. Overall, this set of instructions

aims to replicate how human analysts analyze financial statements to determine whether a

firm’s performance is sustainable (Bouwman et al., 1987).

In addition to the binary prediction accompanied by a rationale statement, we also

prompt the model to provide the predicted magnitude of earnings change and the confidence

in its answer (Bybee, 2023; Choi and Kim, 2023). The magnitudes contain three categories:

large, moderate, and small. The confidence score measures how certain the model is in

producing its answers and ranges from zero (random guess) to one (perfectly informed).

We use gpt-4-0125-preview, which is the most updated GPT model by OpenAI at

the time of our experiment. The temperature parameter is set to zero to ensure minimal

variability in the model’s responses. We do not specify the amount of max tokens, and

top-p sampling parameter is set to one (i.e., the most likely word is sampled by the model

with probability one). In addition, we enable the logprobs option to obtain token-level

logistic probability values. Figure 1 provides a visual illustration of GPT’s processing steps.

3.2 Data

We use the entire universe of Compustat annual financial data from the 1968 to 2021

fiscal years. We also set aside data for 2022 to predict 2023 fiscal year earnings to test for

Financial Statement Analysis with Large Language Models 12

the robustness of the model’s performance outside GPT’s training window. In particular,

the GPT-4-Turbo preview’s training window ends in April 2023, and the model cannot have

seen the earnings data of 2023, which was released in late March 2024. Following prior

literature, we require that each observation has non-missing total assets, year-end assets

value exceeding one million dollars, a year-end stock price exceeding one dollar per share,

and a fiscal period end date of December 31.

14

We also drop observations where the balance

sheet equation does not hold. These filters leave us with 150,678 observations from 15,401

distinct firms, reasonably approximating the Compustat universe.

For each firm-year, we reconstruct the balance sheet and income statement using the

data from Compustat. The format follows Capital IQ’s balancing model and is the same

across all firm years. We omit any identifying information, such as the firm name or dates of

the financial statements. This step ensures that all firm-year observations have an identical

financial statement structure. Consistent with US GAAP reporting requirements, we provide

two years of balance sheet and three years of income statement data. An example of the two

statements is provided in Appendix B.

15

For the analysis that involves analyst forecasts, we use data from IBES, starting the

sample in 1983. We extract individual forecasts and construct monthly consensus forecasts.

This analysis restricts the sample to firm-years with analyst following. We require that each

observation has at least three analyst forecasts issued, which leaves us with 39,533 firm-year

observations.

We report descriptive statistics for the variables used in our analyses in Table 1. Panel A

describes the full sample (1968-2021), and Panel B is restricted to the analyst sample (1983-

2021). The data in Panel A reveals that approximately 55.5% of observations report an actual

increase in earnings (Target). Predicted values include the prefix “Pred ” and vary depending

on the model. For example, GPT prediction (P red GP T ) implies that, on average, 53.0% of

observations will experience an increase in earnings. In Panel B, P red Analyst1m denotes

the forecasts issued within one month from the previous year’s earnings release. Analyst

forecasts indexed by 3m and 6m suffixes are defined in an analogous manner. Compared to

GPT, financial analysts tend to be slightly more pessimistic in their forecasts (fluctuating

around 52% depending on the timing of the forecasts). Panel B also reveals that companies

in the Analyst Sample are, on average, larger in size (Size), have a lower book-to-market

ratio (BtoM), higher leverage (Leverage), and lower earnings volatility (Earn Vol). However,

they are similar in terms of the actual frequency of EPS increases.

14

Focusing on December 31 firms allows for more straight-forward asset pricing tests in Section 7 and is

consistent with Ou and Penman (1989); Hunt et al. (2022).

15

Importantly, we do not train or fine-tune the LLM model on the financial statements. The model

observes only a single balance sheet and income statement at a time, as provided in Appendix B.

Financial Statement Analysis with Large Language Models 13

4 How Does an LLM Perform Compared to Financial Analysts?

In this section, we evaluate the performance of a large language model in the analysis

of financial statements aimed at predicting the direction of future earnings by using human

analysts as a benchmark. All prediction models have a binary target variable, which indicates

an increase or decrease in EPS in the subsequent year.

4.1 Prediction Methods and Evaluation Metrics

Naive model First, as a naive benchmark, we assume that the directional change in

earnings will stay the same. In particular, if EPS has increased (decreased) in year t relative

to year t − 1, the naive prediction for year t + 1 is also “increase” (“decrease”).

Analysts’ forecasts We use a consensus analyst forecasts of year t + 1 EPS published

following the announcement of year t earnings. If there are multiple forecasts issued by a

single analyst, we use the closest one to the year t earnings release dates. This approach

helps us to ensure that human analysts are making predictions of one-year-ahead earnings

based on financial statements published in the current year. Then we take the median value

of analysts’ forecasts and compare it to the actual year t EPS. We require at least three

analyst forecasts in a given firm-year to compute median values. If the median forecasted

EPS value is larger than the year t EPS, we label the prediction as “increase” and vice versa.

Analyst forecast accuracy is then obtained in an analogous manner.

As a comparison, we also collect analyst forecasts issued at least three and six months

after the release of year t financial statements. This ensures that the analysts have enough

time to process the reported financials. However, this also means that the analysts will

have access to one or two quarterly financial statements and other contextual information

generated during the year t + 1. Therefore, human analysts generally have an informational

advantage relative to the models that rely on time t information only.

Evaluation Metrics We report two common metrics to evaluate the quality of the pre-

diction method: accuracy and F1-score. Accuracy is the percentage of correctly predicted

cases scaled by the total number of predictions made. F1-score is the harmonic mean of

precision and recall. Precision measures the proportion of true positive predictions in the

total positive predictions, while recall measures the proportion of true positive predictions

out of all actual positives. In particular, F1-score is defined as follows:

F 1 =

2 × T P

2 × T P + F P + F N

(1)

Financial Statement Analysis with Large Language Models 14

where T P is the number of true positive predictions, F P is the number of false positive

predictions, and F N is the number of false negative predictions.

4.2 Main Results

Table 2 compares GPT’s prediction accuracy with that achieved by financial analysts.

Based on the first-month forecast following the release of prior year financial statements,

analysts’ accuracy is 52.71% and F1 score is 54.48% when predicting the direction of one-

year-ahead earnings. As expected, this is better than predictions based on a naive model

(accuracy = 49.11% and F1 score = 53.02%). However, these results also reiterate the

notion that changes in earnings are very hard to predict, even for sophisticated financial

analysts. As expected, the analysts’ prediction accuracy improves through the course of

the year t + 1, achieving an accuracy of 55.95% and 56.58% for month-three and month-six

forecasts, respectively.

Turning to GPT’s predictions, we observe the following: Using a simple prompt instruct-

ing GPT to analyze financial statements and predict the direction of future earnings yields an

accuracy of 52.33% and an F1-score of 54.52%. Thus, without CoT reasoning, the model’s

performance is on par with the first-month consensus forecasts by financial analysts, fol-

lowing the earnings release. However, the performance markedly improves when we utilize

CoT-based GPT forecasts. With chain-of-thought prompts, GPT achieves an accuracy of

60.35%, or a 7 percentage points increase compared to analyst predictions one month after

the earnings release. The difference is statistically significant at 1% level.

16

This edge is

particularly noteworthy since we do not provide to the language model any available to the

analysts narrative or contextual information beyond the balance sheet and income statement.

Taken together, our results suggest that GPT can outperform human analysts by per-

forming financial statement analysis even without any specific narrative contexts. Our results

also highlight the importance of a human-like step-by-step analysis that allows the model to

follow the steps typically performed by human analysts. In contrast, simply instructing the

model to analyze complex financial statements does not yield strong prediction results.

4.3 Complementarity Between Human Analysts and GPT

Given that GPT outperforms human analysts in predicting future earnings, this finding

raises the question of whether an LLM can largely replace human analysts. In our context,

humans are expected to rely on a broader information set and hence should have an advantage

over an LLM that does not have access to qualitative information, for example. More

16

GPT outperforms human analysts in terms of accuracy under 5% statistical significance.

Financial Statement Analysis with Large Language Models 15

generally, humans often rely on soft information not easily accessible to a machine (Costello

et al., 2020; Liu, 2022), which puts humans at an informational advantage. We next explore

the presence of complementarities and trade-offs related to LLM vs. human forecasts.

Sources of Incorrect Answers We start with the analysis of instances where forecasts are

erroneous. We estimate a simple linear regression to examine whether firm characteristics

have systematic associations with prediction accuracy. I(incorrect = 1) is an indicator

variable that equals one when the earnings prediction does not match the actual change in

earnings. We then estimate the following OLS regression:

I(incorrect = 1)

it

= βX

it

+ δ

year

+ δ

ind

+ ε

it

(2)

X

it

is a vector of firm i’s year t characteristics: asset size, leverage, book-to-market ratio,

earnings volatility, loss indicator, and property, plant, and equipment scaled by total assets.

δ

year

and δ

ind

denote year and industry (SIC two-digit) fixed effects, respectively. All con-

tinuous variables are winsorized at the 1% level and standard errors are clustered at the SIC

two-digit industry level.

We present the results in Table 3, Panel A, and Figure 2. In column (1), we document

that GPT’s predictions are more likely to be inaccurate when the firm is smaller in size,

has a higher leverage ratio, records a loss, and exhibits volatile earnings. These results are

intuitive and, notably, prior studies find these characteristics to be economically associated

with earnings quality.

17

For comparison, in columns (2), (3), and (4), we report the determi-

nants of analysts’ inaccurate predictions. Several interesting differences emerge compared to

column (1). First, even though analysts face difficulties in predicting small firms’ earnings,

the magnitude of these coefficients is nearly half compared to the coefficient in column (1)

(p-value is less than 1% for all three comparisons). Considering that analysts have access to

narrative information and broader context, this result is consistent with Kim and Nikolaev

(2023b), who show that context matters more for prediction tasks when the firm is smaller

in size. Another notable difference is that analysts are less likely to make errors relative to

17

Due to high fixed costs of maintaining adequate internal controls, small firms may have lower-quality

accounting earnings (Ball and Foster, 1982; Ge and McVay, 2005) and are more likely to restate their

earnings in subsequent periods (Ashbaugh-Skaife et al., 2007). High leverage ratios are often indicative of

firms being closer to debt covenant violations. Such firms might be more incentivized to engage in earnings

management to meet or beat financial thresholds, leading to lower-quality earnings (Watts and Zimmerman,

1986). Also, when firms experience unusual financial circumstances such as reporting losses, analysts tend to

perform worse than average (Hwang et al., 1996; Hutton et al., 2012). Lastly, Donelson and Resutek (2015)

document that past volatility of earnings is negatively associated with its predictive power. Considering that

GPT only uses numerical financial information as its input, these results align well with Kim and Nikolaev

(2023a,b) that contextual information becomes relatively more important when firms experience losses and

their size is small.

Financial Statement Analysis with Large Language Models 16

GPT when a firm reports a loss and exhibits volatile earnings. These findings are the same

for all analyst forecast measures as the magnitudes of the coefficients on Loss and Earn-

ings Volatility in columns (2), (3), and (4) are consistently smaller than that of column (1).

Taken together, our results show that analysts and GPT both have difficulties in predicting

the earnings of small, loss-reporting firms. However, analysts tend to be relatively better

at dealing with these complex financial circumstances than GPT, possibly due to other soft

information and additional context (Costello et al., 2020).

Incremental Informativeness We next test whether analysts’ forecasts, despite lower

accuracy, add useful insights incremental to GPT’s predictions. We regress an indicator

I(Increase = 1), which equals one when subsequent period earnings increase and zero

otherwise, on the direction of future earnings predicted by GPT and/or analysts. Specifically,

we estimate the following OLS regression:

I(Increase = 1)

it

= β

1

P red GP T

it

+ β

2

P red Analyst

it

+ δ

year

+ δ

ind

+ ε

it

(3)

where P red X is an indicator that equals one when ”X” (which is either “GPT” or “An-

alyst”) predicts an increase in earnings, and zero otherwise. δ

year

and δ

ind

are year and

industry (SIC two-digit level) fixed effects. Standard errors are clustered at the industry

level.

The results are presented in Table 3, Panel B. In column (1), we find that GPT’s predic-

tion, on a standalone basis, is positively associated with future outcomes while controlling

for industry and year-fixed effects. The same result holds for individual analysts’ forecasts

as can be seen in columns (2), (3), and (4). Consistent with the results in Table 2, analysts’

forecasts issued six months after the earnings release exhibit stronger associations with the

actual outcomes than the forecasts issued one month after the earnings release (the adjusted

R-squared in column (4) is 0.044, which is almost twice the adjusted R-squared value in

column (2)).

In columns (5), (6), and (7), we include both GPT and analyst forecasts simultaneously

in a single regression. Across all models, both coefficients are statistically significant. We

observe that the coefficient on GPT is largely unchanged (its t-statistics marginally decreases

from 2.99 to 2.67) and the coefficient on analysts’ predictions increases in magnitude when

both variables are used simultaneously (e.g., from 0.073 in column (2) to 0.110 in column (5)).

The adjusted R-squared value also increases from 0.070 in column (1) to 0.089 in column

(5). These results indicate that GPT and human analysts are complementary, corroborating

Financial Statement Analysis with Large Language Models 17

our results in Table 3.

Does GPT Do Well When Humans Struggle? To explore the relative advantage of

an LLM compared to human analysts, we examine instances when human analysts are likely

to struggle with accurately forecasting earnings. In particular, we identify instances where

analyst forecasts are likely to be biased or inefficient ex ante. We also consider instances in

which analysts tend to disagree about future earnings (exhibit dispersion).

To estimate ex-ante bias (inefficiency) in analysts’ forecasts, we run cross-sectional re-

gressions of analyst forecast errors on the same firm characteristics as in Equation 2. We

then take the absolute value of the fitted values from this regression.

18

Consistent with prior

literature, forecast errors are defined as the difference between actual EPS and forecasted

EPS, scaled by the stock price at the end of the last fiscal year. In addition to ex-ante bias,

we measure the disagreement in analysts’ forecasts. Specifically, we use the standard devia-

tion of analysts’ forecasted EPS values, scaled by the stock price at the end of the preceding

fiscal year.

We then partition the sample based on the quartile values of analyst bias and estimate

Equation 3 for each group. The results are presented in Panel C of Table 3. By comparing

the coefficients in columns (1) and (2), we observe important differences. When the analysts’

bias is expected to be relatively low, GPT’s predictions receive a smaller weight (compared

to that in column (2) when the bias is expected to be higher), and the coefficient on analysts’

predictions is relatively large. These differences are statistically significant at the 1% level.

They suggest that GPT is more valuable in situations when human analysts are likely to

be biased. Similar results follow in columns (3) and (4) when we partition the sample on

analyst disagreement: GPT’s prediction receives more weight when analysts’ disagreement

is high and vice versa.

Taken together, our results indicate that GPT’s forecasts add more value when human

biases or inefficiencies are likely to be present.

5 Comparison with Specialized ML Models

So far, we have shown that GPT’s predictions largely outperform human analysts. As

human analysts are known to have a systematic bias in their forecasts, we raise the bar and

turn to more sophisticated benchmarks, including state-of-the-art machine learning models.

18

Note that errors should be unpredictable if forecasts are unbiased and efficient.

Financial Statement Analysis with Large Language Models 18

5.1 Methodology

Following Ou and Penman (1989) and Hunt et al. (2022), we focus on 59 financial vari-

ables obtained from the Compustat Annual database to predict future earnings but exclude

the price-to-earnings ratio for consistency reasons (stock price is not financial statement in-

formation). We perform two different prediction exercises: stepwise logistic regression and

ANN. In both cases, we use a rolling five-year training window. That is, we estimate (train)

the model using data from years t − 5 to t − 1, and apply the trained model to the year t

data to generate forecasts. By doing so, we ensure that the models do not learn from the

test data during the training phase. Since our sample spans from fiscal year 1962 to 2021,

we train 56 distinct models for each prediction method.

In the stepwise logistic regression, we follow Ou and Penman (1989) and only retain the

significant variables from the first step when performing the second step of the procedure.

The trained logistic regression then yields a probability value instead of a binary variable as

its output. We classify observations with a probability value higher than 0.5 as an increase

(and a decrease otherwise). In contrast to the logistic regression, the ANN model allows

for non-linearity among the predictors. Our model has an input layer with 59 neurons, two

hidden layers with 256 and 64 neurons each, and an output layer with two neurons (Kim

and Nikolaev, 2023a). The output layer produces a two-dimensional vector (p

1

, p

2

), and we

classify the outcome as an increase when p

1

> p

2

and vice versa. We use Adam optimizer,

ReLU activation function, and cross-entropy loss. We use batch training with a batch size of

128. All input variables are standardized. Missing continuous variables are imputed as the

year-industry average. We apply early stopping criteria with a patience of five epochs, which

indicates that the model stops training when there is no improvement in performance for

five consecutive epochs.

19

For each training phase, we assign a random 20% of the training

sample to the validation set and optimize the learning rate and dropout rate. Specifically,

we perform a grid search of nine iterations, using three learning rates (1e

−5

, 1e

−3

, and 1e

−1

)

and three dropout rates (0, 0.2, and 0.4).

5.2 Main Results

Overall Results We report the results in Table 4, Panel A, and Figure 3. Stepwise logistic

regressions following Ou and Penman (1989) achieve an accuracy of 52.94% and an F1 score

of 57.23%. We observe a considerably higher prediction accuracy using the ANN model.

The model achieves a 60.45% accuracy and an F1-score of 61.62%. This result highlights the

importance of non-linearities and interactions among financial variables for the predictive

19

The maximum allowed training epochs is set to 50 yet none of the models hit this limit.

Financial Statement Analysis with Large Language Models 19

ability of numerical information.

Consistent with the results in the analyst sample, our CoT-based GPT predictions achieve

an accuracy of 60.31%, which is on par with the specialized ANN model. In fact, in terms

of the F1-score, GPT achieves a value of 63.45%, which is the highest among all prediction

methods. This indicates a remarkable aptitude of GPT to analyze financial statements.

20

Not only does it outperform human analysts, but it generates performance on par with the

narrowly specialized state-of-the-art ML applications.

We further examine the possibility that ANN versus GPT performance is partly driven

by the slightly different input variables: we use balance sheet and income statement variables

for GPT, but 59 Ou and Penman (1989) ratios for ANN. Thus, to ensure that the results

are not an artifact of this choice, we also train an ANN model using the same balance sheet

and income statement variables. We scale balance sheet items by total assets and income

statement items by total sales. We also include change in revenue, change in lagged revenue,

change in total assets, and revenue scaled by total assets. The ANN model with financial

statement information achieves an accuracy of 60.12% and an F1-score of 61.30%, which are

slightly lower than those of GPT.

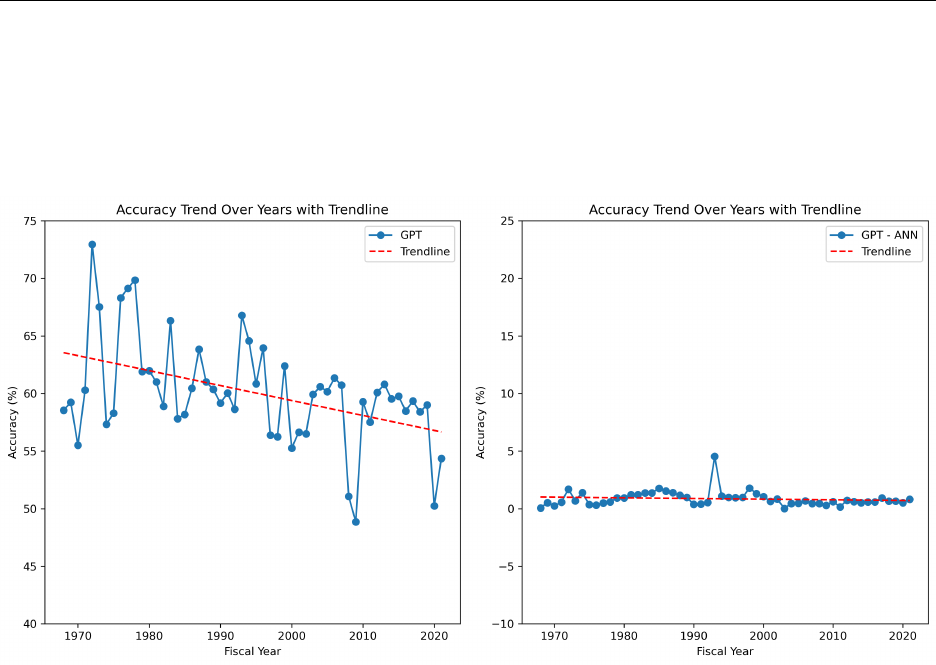

Time Trends We report the overall time trend of GPT’s and ANN’s prediction accuracy

in Figure 4 (detailed annual accuracy and F1-scores are reported in Appendix A). The left

panel shows a negative time trend in GPT’s prediction accuracy. In terms of the economic

magnitude, GPT’s accuracy has decreased, on average, by 0.1% point per year, which trans-

lates into a decrease in accuracy by 5.4 percentage points over the 54-year sample period.

Interestingly, we observe sharp drops in prediction accuracy in 1974, 2008-2009, and 2020.

These periods overlap with international macroeconomic downturns: the oil shock in 1974,

the financial crisis in 2008-09, and the Covid-19 outbreak in 2020. This result is comforting

as GPT should not foresee unexpected, exogenous macroeconomic shocks if its performance

is unrelated to memory.

21

Most importantly, in the right panel of Figure 4, we plot the

time-series trend of the “difference” in the accuracy of GPT and ANN models. ANN models

exhibit similar time trends compared to GPT with their annual differences fluctuating close

to zero. Thus, for both evaluation metrics, we find a negative and statistically significant

time trend, implying that it has become increasingly difficult to predict future earnings using

only numeric information.

22

20

GPT outperforms stepwise logistic predictions at 1% level. However, the difference between GPT and

ANN performance is not statistically significant at conventional levels.

21

We discuss this potential issue more formally in Section 6.1.

22

This result corroborates Kim and Nikolaev (2023a), who find that the informational value of narrative

context in predicting future earnings has increased over time.

Financial Statement Analysis with Large Language Models 20

Sources of Inaccuracy Next, we explore which firm characteristics are associated with

the likelihood of making incorrect earnings predictions. Column (1) of Table 4 focuses

on the accuracy of GPT’s predictions and is consistent with our findings for the analyst

sample (Table 3). We then report the determinants of the incorrect predictions by ANN

and logistic regression models in columns (2) and (3), respectively. Both the ANN and

logistic regression are also more likely to generate inaccurate predictions when firms are

smaller, have higher leverage, record a loss, and have higher earnings volatility. However,

interestingly, ANN is relatively more likely than GPT to make inaccurate predictions when

firms are smaller and record a loss. A one standard deviation decrease in firm size reduces

GPT’s prediction accuracy by 3.4 percentage points. In contrast, the same change in firm

size is associated with a 5.5 percentage point decrease in prediction accuracy for the ANN

model. The difference between the two coefficients is statistically significant at the 1% level.

Similarly, the coefficients on Loss and Earnings Volatility are statistically different at the

5% level. The differences between logistic regression and GPT predictions are even more

pronounced. These findings hint at the ability of GPT to make better predictions for less

common data patterns (e.g., loss-making firms), presumably due to its ability to rely on its

conceptual knowledge and theoretical understanding of business.

Incremental Informativeness While GPT’s performance is comparable to that of an

ANN, we also examine whether GPT conveys incremental information when compared to

specialized ML models. This analysis is reported in Panel C. In columns (1) to (3), we show

that across all models, predicted earnings changes, individually, are positively associated

with the actual changes. In column (4), when both GPT and ANN forecasts are included

simultaneously, both remain statistically significant and hence contain incremental informa-

tion. Interestingly, the coefficient on ANN becomes one-third in magnitude (compared to

column (2)) and its statistical significance deteriorates (from a t-statistic of 3.69 to 2.36),

whereas the coefficient on GPT remains stable. This result suggests that GPT captures some

additional dimensions of information than non-linear interactions among financial variables

when predicting future earnings, e.g., external theoretical knowledge.

5.3 Confidence, Magnitude, and Generalizability

5.3.1 LLM’s Confidence

Method We estimate the confidence of LLM’s answers based on two methods. First, we

explicitly instruct the model to report a confidence score on its earnings prediction, with one

being perfect confidence and zero being a pure guess (Bybee, 2023). Second, we compute

Financial Statement Analysis with Large Language Models 21

an alternative confidence score based on token-level logistic probability values, which we

directly take from the probability vector provided by the model. Specifically, we average the

logistic probability values across all output tokens to measure the overall certainty of the

model answer.

Results For both approaches, we report prediction results of the high confidence (fourth

quartile) and the low confidence (first quartile) groups. We present the results in Figure 5 and

columns (1) to (4) of Table 5. The model performs better when it reports greater confidence.

In the high confidence group, the model achieves an average accuracy of 62.44% (63.15%)

based on the reported confidence value (confidence score derived from logistic probabilities),

which is approximately 2.6 (4.6) percentage points higher than the corresponding accuracy

of the low confidence group. We find similar results based on the F1 score. Overall, this

result indicates that the model is capable of distinguishing between instances where earnings

are more predictable.

5.3.2 Magnitude

Method Recall that we also instruct the model to provide the expected magnitude of

earnings change: “large”, “moderate”, or “small.” As in Ou and Penman (1989) and Hunt

et al. (2022), we expect the model to be more accurate in determining the directional change

when it predicts large rather than immaterial changes.

Results We present the results in Figure 5 and columns (5) and (6) of Table 5. We find that

the average accuracy is 62.03% when the model predicts large changes whereas it decreases

to 60.22% for small changes. We document a similar pattern for F1 scores: 61.16% for large

changes vs. 57.95% for small changes. Overall, when the model expects a larger change, its

directional predictions are more accurate.

5.3.3 LLM type

Method We also test whether the capabilities associated with a specific LLM type deter-

mine its predictive ability. In the main analysis, we use the most recent version of GPT,

GPT-4-turbo. We also experimented with a less powerful LLM version from the same fam-

ily, GPT-3.5-turbo, and otherwise used the same experimental settings. In addition, we also

explored another family of LLMs provided by Google, namely, Gemini Pro 1.5 (also with

the same experimental settings). Due to considerable processing time, we choose a random

20% sample for this set of analyses.

Financial Statement Analysis with Large Language Models 22

Results We present the results in Figure 5 and Table 5, columns (7) to (9). GPT 4

achieves the best performance, followed by Gemini 1.5, and GPT 3.5. Gemini 1.5 achieves

an overall accuracy of 59.15%, which is close to that of GPT 4 (61.05%) in the same 20%

sample. However, GPT 3.5 achieves an accuracy of only 52.29% and an F1-score of 59.17%,

which are all substantially lower than our GPT 4 benchmarks. We also find that the outputs

of GPT 4 and Gemini 1.5 are largely overlapping with only 1,808 out of 30,135 firm-years

(approximately 6%) having opposing predictions. Overall, this analysis suggests that our

findings are not confined to a specific family of LLMs. Although the final prediction results

largely rely on the performance of the backbone language model, recent generations of LLMs

are capable of analyzing financial statements and making informed decisions.

6 Where Does an LLM’s Predictive Ability Come From?

In this section, we aim to understand the sources of GPT’s predictive ability. We explore

two broad explanations. The first explanation is that GPT’s performance comes from its

memory, e.g., due to the model’s ability to identify the company based on numeric data.

We aim to rule out this possibility as it undermines the integrity of the model’s predictions.

Another explanation is that the strength of the model is in its ability to generate narrative

insights based on its analysis of numeric data. We explore each of these possibilities next.

6.1 Is There a Look-ahead Bias in the Model?

An important concern with the reliance on a pre-trained large language model in a

prediction task is its potential for a look-ahead bias (e.g. Sarkar and Vafa, 2024). For

example, the model may have been trained on the company-specific financial data and,

hence, already may “know” the answer as to whether earnings increased or decreased in the

future (or have a general sense of how well the company did over time). Our research design

is relatively immune from this potential bias (e.g. Glasserman and Lin, 2023) because we use

a consistent anonymized format of financial statements across firms. This makes it virtually

impossible for the model to infer a firm’s identity from the structure of financial statements

or specific account names. We also ensure the statement does not contain any dates and use

relative years, i.e., t or t − 1. This later mitigates the concern that the model has knowledge

about macroeconomic trends in a specific year and uses it to predict future earnings. To

appreciate this issue, imagine that the model was able to match a given set of financials to

2007. In this case, the model could draw on its knowledge of the major economic downturn

in 2008 and adjust its prediction accordingly.

Even though the anonymous nature of financial statements should prevent the model from

Financial Statement Analysis with Large Language Models 23

“guessing” the entity, we perform two formal analyses to further rule out this concern.

23

Can GPT Guess Firm Name and Year? In this set of tests, we instruct the model

to make guesses about the firm or year based on the financial statements that we provide.

Specifically, we ask the model to provide the ten most probable firm names and the most

probable fiscal year. Additionally, we force the model to produce outputs even when it

believes that it cannot make any informed guess.

For economic reasons, our first set of experiments does not include any chain-of-thought

prompts. We perform this experiment on 10,000 random observations. The results are

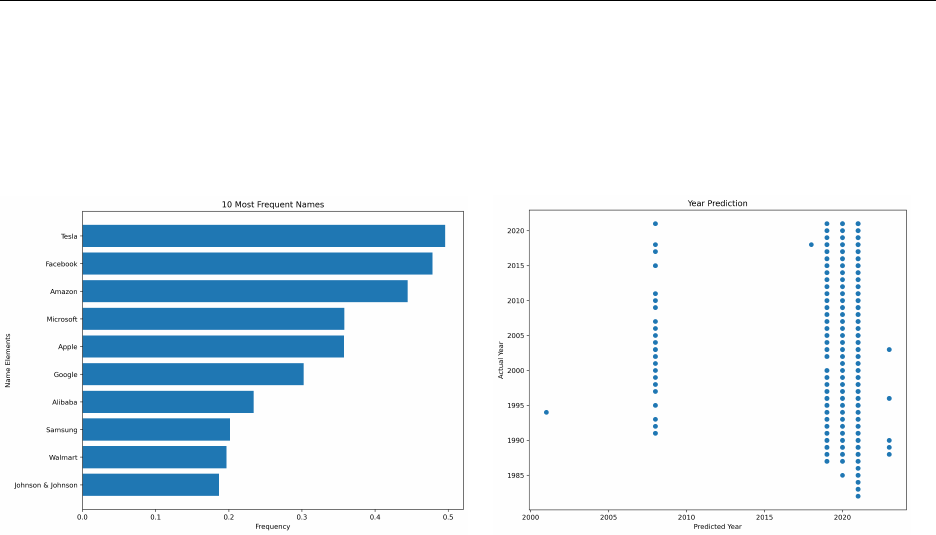

presented in Table 6, Panel A. We find that the model correctly identifies the firm name

with an accuracy of 0.07%, which is lower than the accuracy of a random guess from the

population of names in our data. In Figure 7 left panel, we plot the ten most frequently

produced firm names. We find that the model almost always predicts the same set of ten

firms, including Tesla, Facebook, and Amazon. This result is consistent with the model’s

training objective to produce the most probable words (name in this case) conditional on its

information. Absent an informative prior, the model is likely to predict the most visible or

popular firms in its training corpus.

The accuracy of correctly guessing the year of financial statements is 2.95%. In the right

panel of Figure 7, we plot the actual fiscal year and GPT’s prediction in one plane. We

observe that almost all predictions are 2019, 2020, or 2021 independent of the actual year,

which is inconsistent with the model’s ability to guess the year.

24

In the second set of experiments, we use the exact same chain-of-thought prompts as

in the main analysis, but then ask the model to guess the firm name and year (instead of

predicting earnings). We use a random sample of 500 observations. Panel B of Table 6

contains the results. The findings confirm very low accuracy and thus address a potential

concern that the CoT prompt is more capable of invoking the model’s memory. Taken

together, our results strongly suggest that the model cannot make a reasonable guess about

the entity or the fiscal year from the anonymous financial statements. Therefore, it is highly

unlikely that the model is inadvertently using its “memory” about financial information to

make earnings predictions.

Analysis outside of GPT’s training window As suggested in Sarkar and Vafa (2024),

the most effective way to rule out the model’s look-ahead bias is to perform a test outside

23

Compared to de-identified financial statement data, anonymizing textual data is conceptually more

challenging. Textual data, such as earnings calls, may still retain sufficient contextual information that

potentially allows the model to guess the anonymized firm.

24

Refer to Appendix D for computing the accuracy of a random guess.

Financial Statement Analysis with Large Language Models 24

of the model’s training window. OpenAI’s GPT4-Turbo preview was trained on data up to

April 2023, thereby significantly limiting the scope to conduct this analysis. Nevertheless,

we use financial statement data from fiscal year 2022 (released in January-March 2023) to

predict earnings of fiscal year 2023 (released in early 2024).

We present the results in Table 6, Panel C. As a comparison, we also report prediction

results of the logistic regressions, analyst predictions, and ANN models. GPT achieves an

accuracy of 58.96% and an F1 score of 63.91%. The accuracy (but not the F1 score) is slightly

lower than the average reported in Table 4, Panel A. However, recall that we find an overall

decreasing time trend in GPT’s prediction accuracy. Specifically, as shown in Appendix

A, GPT’s prediction accuracy is only 54.36% for the fiscal year 2021, and 59.01% for 2019

(GPT’s prediction accuracy plummets in 2020 during Covid-19 outbreak). In fact, both

the out-of-GPT-sample accuracy and the F1 score are substantially higher than the average

over the last 10 years (58.01% and 59.15%). Therefore, we interpret our results as GPT’s

out-of-sample performance being closely in line with our “in-sample” results. Furthermore,

GPT achieves a very similar accuracy score out-of-sample as the ANN model (58.96% versus

59.10%) and an even higher F1 score (63.91% versus 61.13%) for the same year, which is

closely in line with our main findings. Taken together, this result corroborates our prior tests

and confirms that the model’s predictive ability does not stem from its training memory.

6.2 Are LLM-Generated Texts Informative?

Next, we explore whether the model’s predictive ability comes from its ability to generate

narrative insights about the financial health and future performance of the company, in

line with its objective to analyze financial statements. We leverage the fact that our CoT